Lecture 8: BLAS, Roofline Model, Strassen’s Algorithm¶

(Recorded on 2022-04-21)

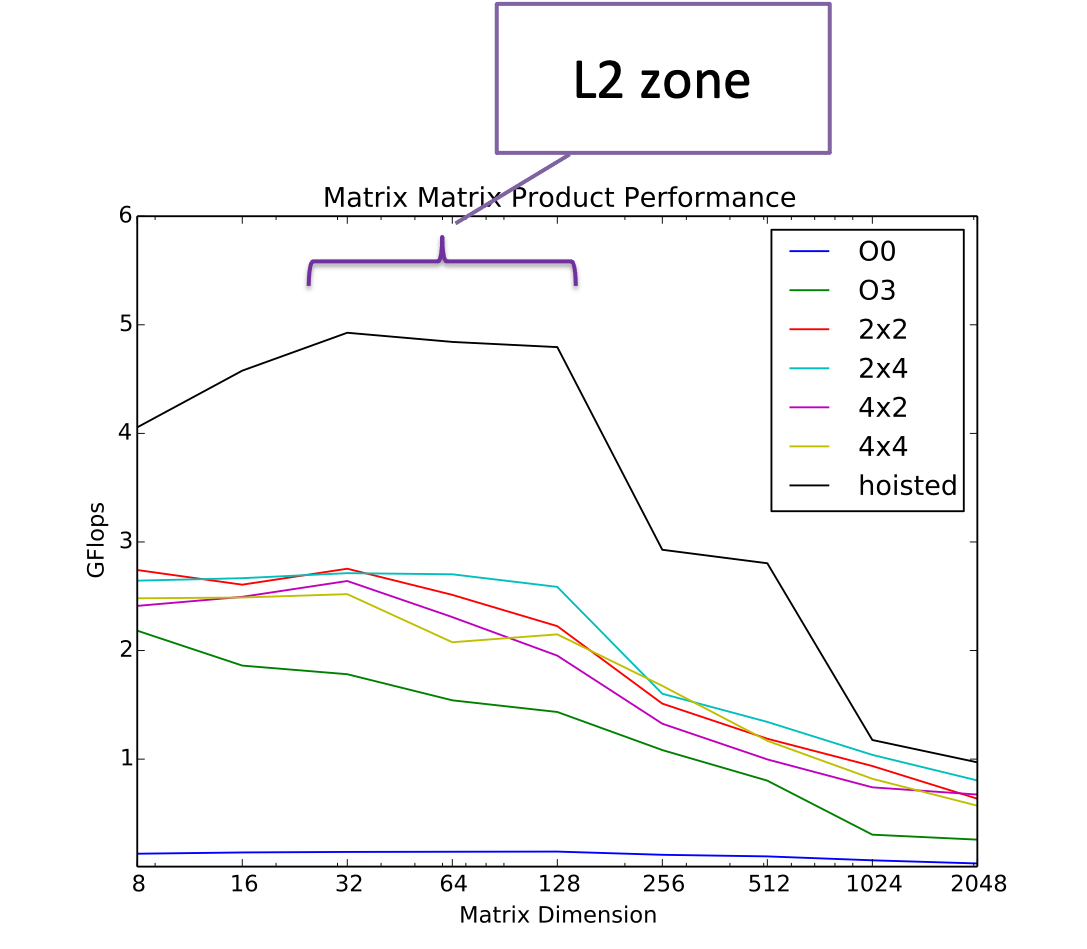

In this lecture we continue our topics in matrix-matrix product. We first recap a sequence of optimizations including hoisting, unroll-and-jam (aka loop unrolling), cache blocking (aka tiling), and transpose block copy. (Different loop orderings will be left for a problem set.) We also recap performance optimizations by investigating SIMD extensions (in our examples, on Intel where they are now known as AVX).

The combination of all of these optimizations results in performance of over 13 Gflops on a low-end laptop. Without the optimizations, performance was one two two orders of magnitude slower.

BLAS¶

We first introduct Basic Linear Algebra Subprograms (BLAS). Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations. It includes a set of core/kernel algorithms for numerical linear algebra. It was originated from a Fortran linear algebra library. But now it has both C bindings and Fortran bindings. It supports four precisions: single, double, single complex, double complex (“s”, “d”, “c”, “z” prefixes).

There are three levels of operations supported by the BLAS functionalities: level-1, vector-vector operations; level 2, matrix-vector operations; level 3 matrix-matrix operations.

The Roofline Model¶

By now you have probably noticed there are two things that come into play when trying to estimate the performance or the efficiency of a computation: how fast the arithmetic operations can be done – and how fast the data can be supplied to the arithmetic unit to do the operations. We know we can never compute faster than the maximum rate of the processor, but we also know we can’t compute faster than the memory subsystem can feed the processor. How do we know which bound to use and how do we use it?

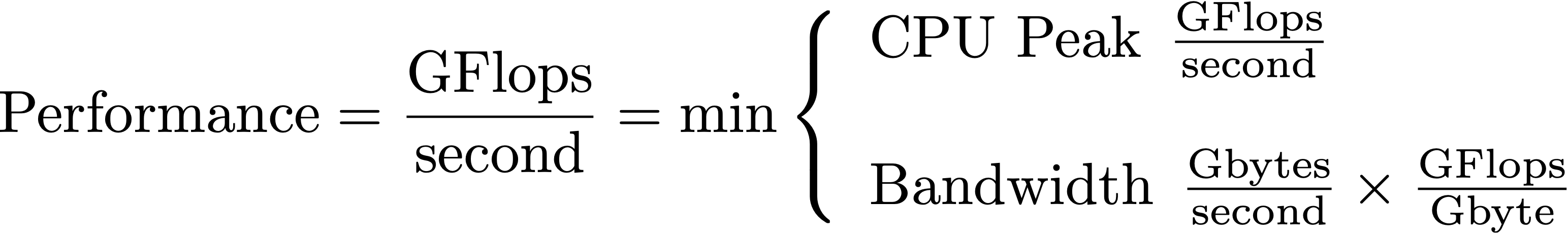

The roofline model was developed by computer scientists at UC Berkeley to address exactly this problem. The key insight in the roofline model is the notion of “arithmetic intensity” of a computation. That is, how many flops per byte are performed by the computation. The maximum performance measured in GFlops/sec is thus the maximum of the computing rate or the bandwidth times numerical intensity:

Peak performance and bandwidth thus give us two lines on a chart that plots GFlops/s vs numerical intensity. One line, CPU peak is independent of numeric intensity and so is horizontal. The other line is the ratio between GFlops/s and numerical intensity, i.e., bandwidth, which produces a sloped line on the chart.

We in fact have multiple lines of each kind to choose from. Max CPU performance depends on whether AVX instructions are being used, whether fused multiply-add is being used, for example. Bandwidth depends on where the data are in memory. Bandwidth to L1 caches is much higher than bandwidth to L2 cache, which is much higher than bandwidth to main memory. Each of these regimes deserve a line on the roofline model chart.

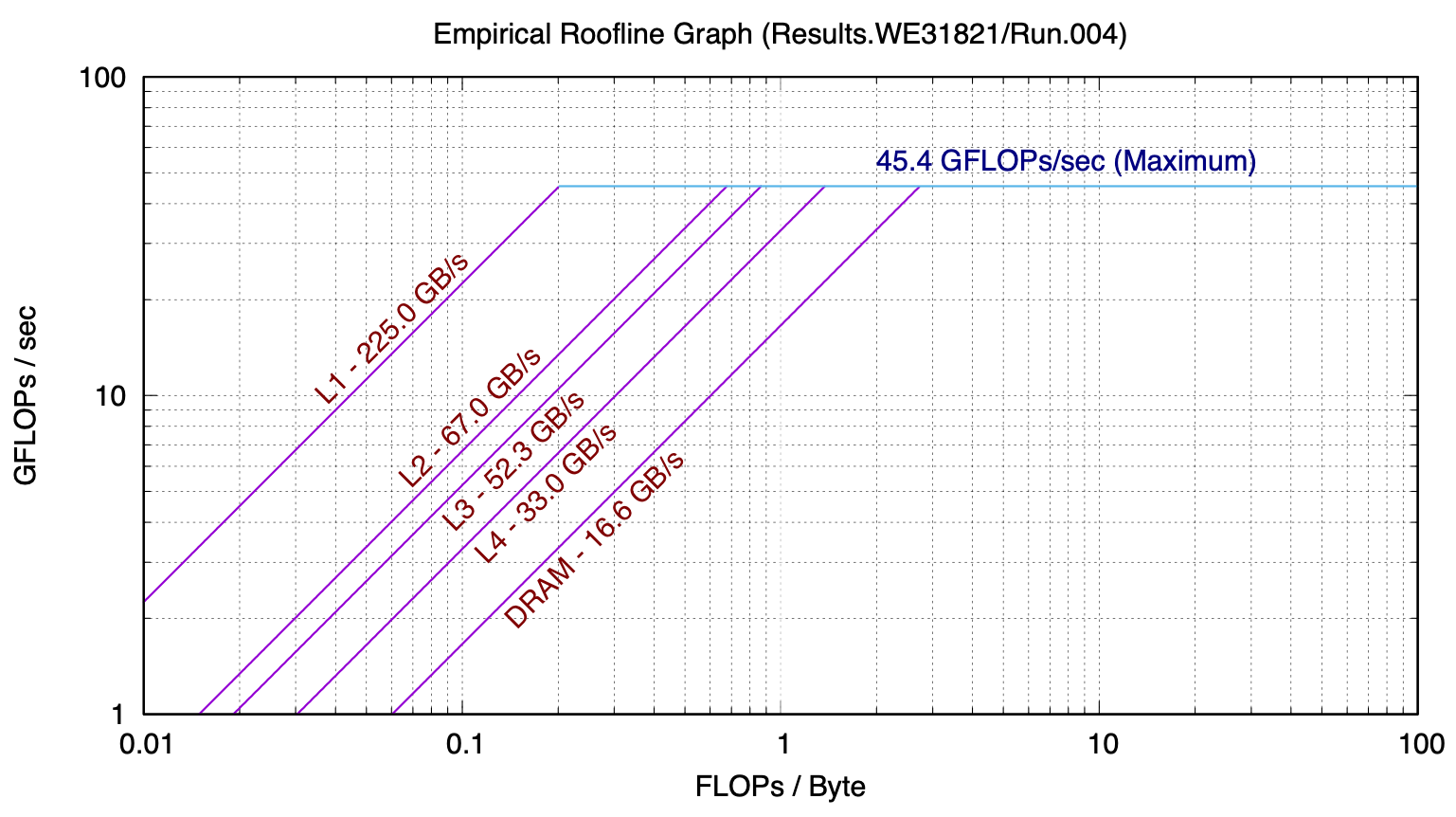

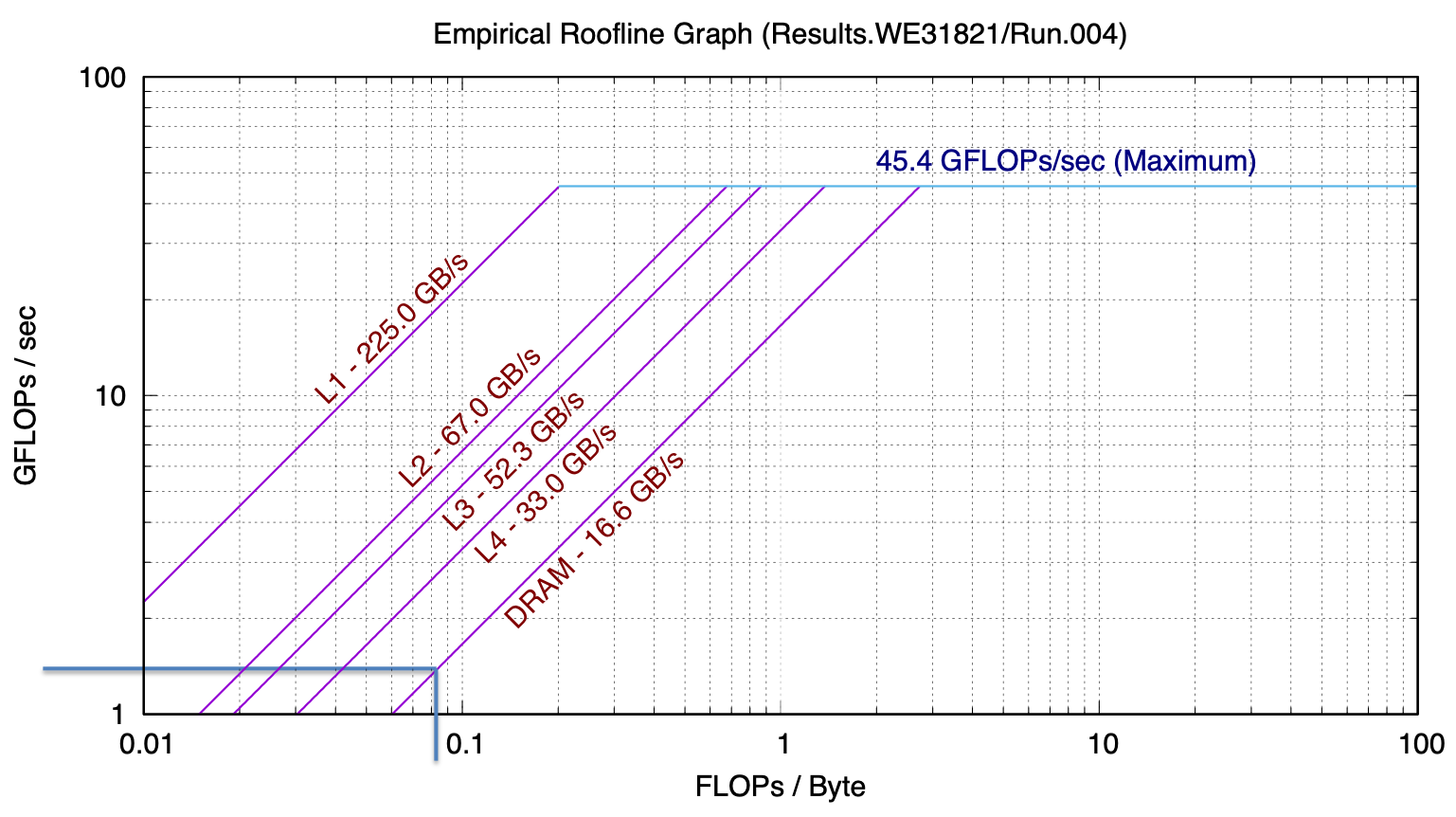

The following is a roofline model for a recent model MacBook Pro, gathered with Berkeley Lab’s Empirical Roofline Toolkit:

This model, while seemingly very simple, has strong predictive power.

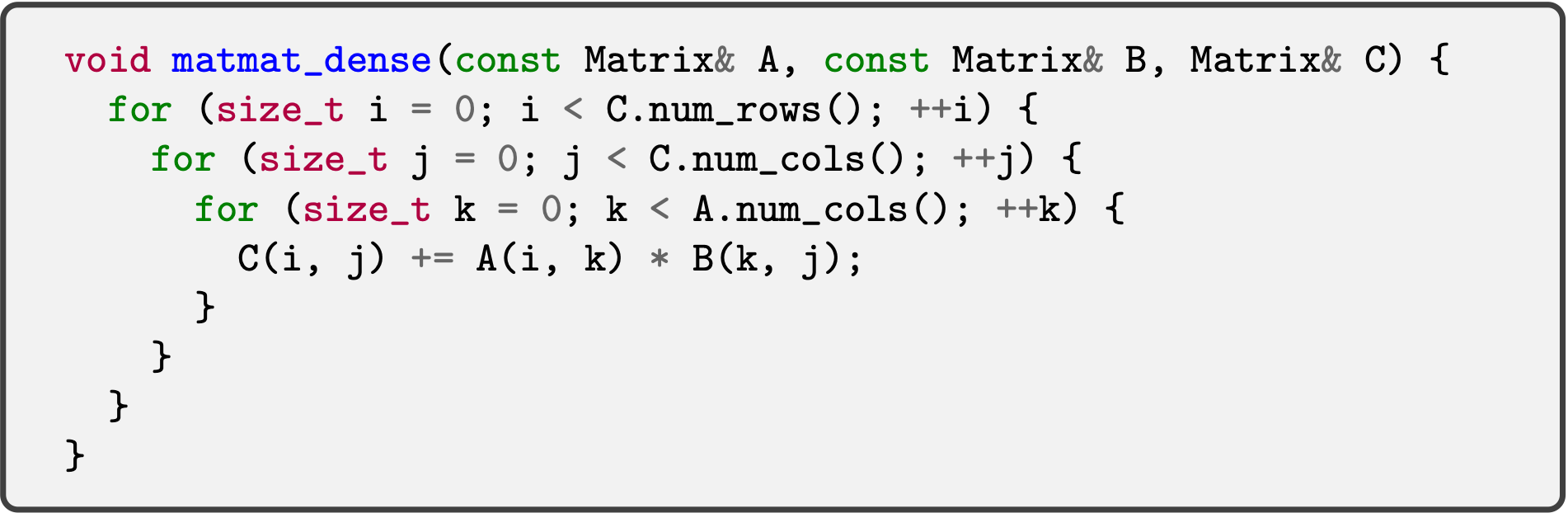

Consider the case of the algorithm we have been studying – matrix-matrix product.

If we count the flops and bytes we see that the basic algorithm with no reuse has a numeric intensity of 1/12 flop/byte. We can use the ert chart to predict how many GFlops/second we would see from that. Let’s consider the case of a large problem, where, if there is no reuse the data would reside in main memory. Then we can see where the DRAM line intersects 1/12 on the x-axis – about 0.4 GFLOP/s

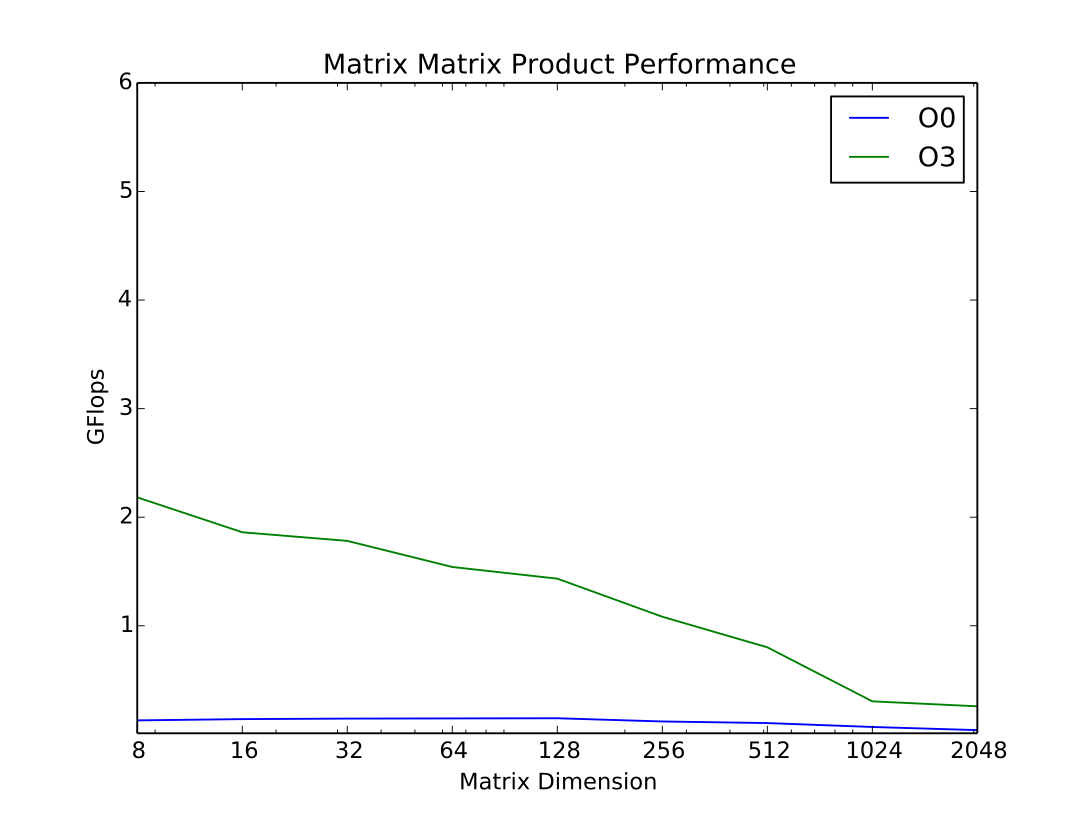

When we run matmat as is (without the reuse enhancing modifications), we see that for large problems we do in fact measure 0.4 GFlop/s.

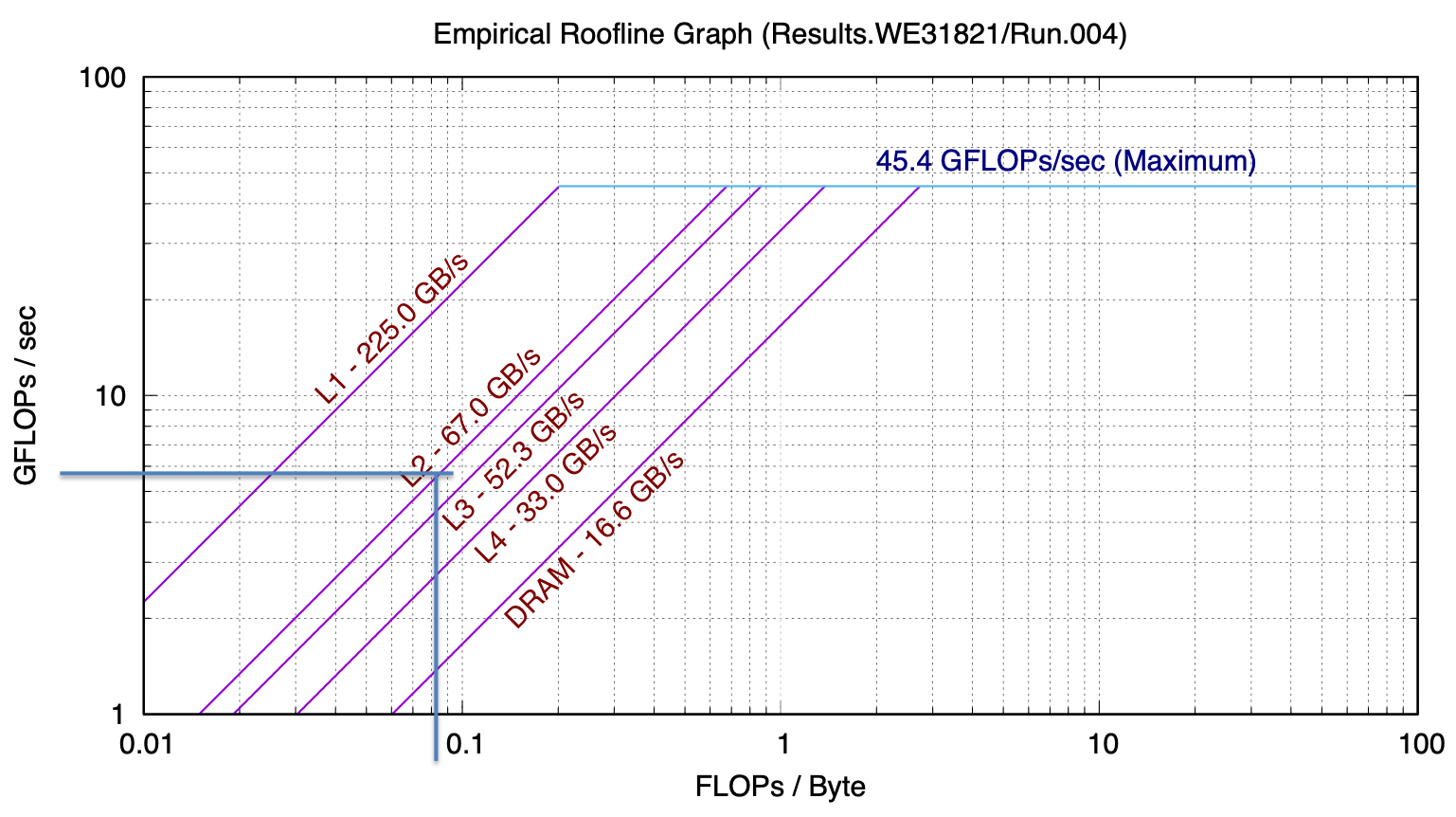

Let’s consider instead the case where we are making good use of cache and most of our problem is in L2 and we’ve applied some techniques for reuse. In that case, what we read off the chart is around 5 GFlop/s

Looking at the actual measured performance, for problem sizes that fit in L2 cache, we see that we achieve approximately 5 GFlops/s:

Strassen’s Algorithm¶

Finally we look at a couple of ways to work smarter (and to work less) in order to reduce time to solution.

We introduce a remarkable algorithm discovered by Volker Strassen (and which now bears his name): Strassen’s algorithm for computing matrix-matrix product with lower than \(N^3\) computational complexity.