Lecture 16: GPUs

(Recorded on 2022-05-19)

This lecture is a continuation of Lecture 15. We learn how to do general-purpose GPU (GPGPU) programming with CUDA through some simple examples. We also introduce the Thrust library.

CUDA

We give an overview of NVIDIA’s programming environment for its GPUs: CUDA, as well as Thrust, a C++ template library interface.

Here are some basics about CUDA. Compute Unified Device Architecture (CUDA) was released by NVIDIA in 2007. It is a parallel computing platform and application programming interface (API) that lets CUDA-capable NVIDIA GPUs be used for general-purpose computing (GPGPU).

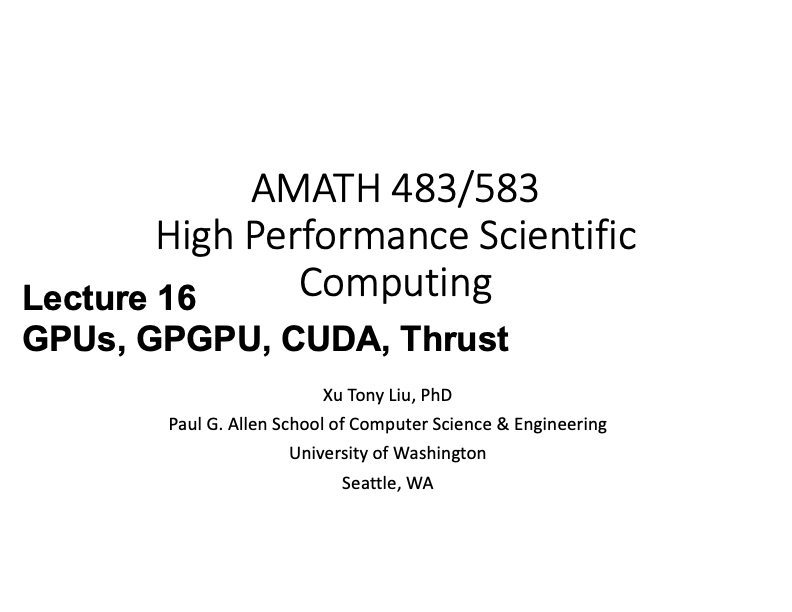

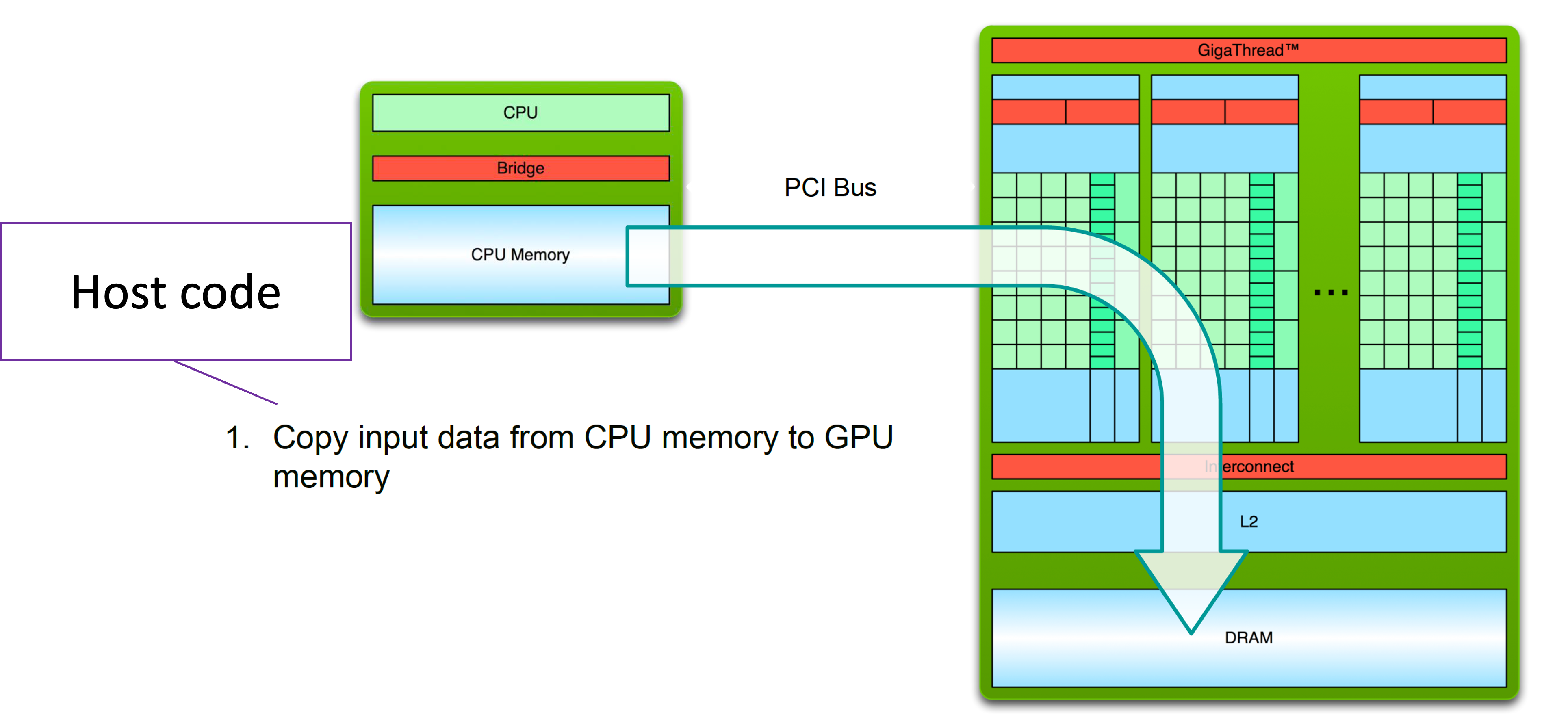

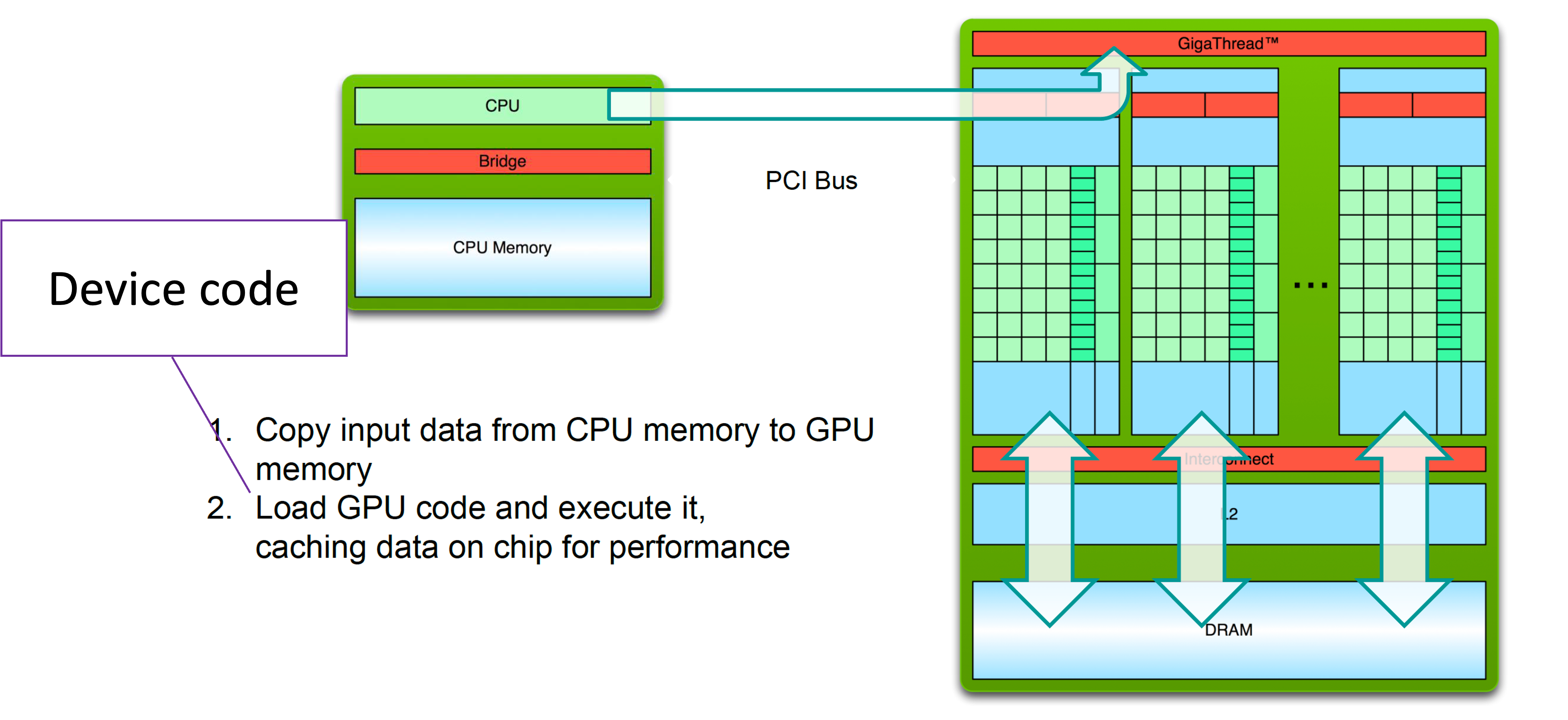

One important point is that GPU programming involves both a host and a device. The host consists of the CPU and its memory, known as host memory. The device consists of the GPU and its memory, known as device memory. Based on this architecture, GPU programming follows a simple processing flow: copy the input data from host memory to device memory, launch a kernel that the GPU executes in parallel, and copy the results from device memory back to host memory.
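The host/device round trip can be sketched as follows. This is a minimal illustration rather than code from the lecture: the kernel `add_one` and the helper `add_one_on_device` are hypothetical names, and the block size of 256 threads is an arbitrary choice.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: executes on the device, one thread per array element.
__global__ void add_one(float* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Hypothetical helper: adds 1 to every element of host_data using the GPU.
// Returns true if all CUDA calls succeeded.
bool add_one_on_device(float* host_data, int n) {
    if (n <= 0) return false;
    float* dev_data = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dev_data, bytes) != cudaSuccess) return false;

    // Step 1: copy input from host memory to device memory.
    cudaMemcpy(dev_data, host_data, bytes, cudaMemcpyHostToDevice);

    // Step 2: launch the kernel with enough blocks to cover n elements.
    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    add_one<<<blocks, threads>>>(dev_data, n);
    cudaError_t launch_err = cudaGetLastError();

    // Step 3: copy results back; this call waits for the kernel to finish.
    cudaError_t copy_err =
        cudaMemcpy(host_data, dev_data, bytes, cudaMemcpyDeviceToHost);

    cudaFree(dev_data);
    return launch_err == cudaSuccess && copy_err == cudaSuccess;
}

int main() {
    float v[4] = {0.0f, 1.0f, 2.0f, 3.0f};
    if (!add_one_on_device(v, 4)) {
        std::printf("CUDA error\n");
        return 1;
    }
    std::printf("%g %g %g %g\n", v[0], v[1], v[2], v[3]);
    return 0;
}
```

Such a file would typically be saved with a `.cu` extension and compiled with `nvcc`.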

Programming using CUDA

CUDA supports the C/C++ and Fortran languages. Let’s take a look at its C/C++ language extensions.

It provides several function execution space specifiers: __global__, __host__, and __device__.

A function execution space specifier determines whether a function executes on the host or on the device, and whether it is callable from the host or from the device.
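As a small sketch of how the three specifiers combine, the example below defines one function of each kind. The function names (`square`, `square_plus_one`, `apply`, `apply_on_device`) are made up for illustration.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// __host__ __device__: compiled for both sides, callable from either one.
__host__ __device__ float square(float x) { return x * x; }

// __device__: executes on the device and is callable only from device code.
__device__ float square_plus_one(float x) { return square(x) + 1.0f; }

// __global__: a kernel. It executes on the device, is launched from the host
// with the <<<blocks, threads>>> syntax, and must return void.
__global__ void apply(float* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] = square_plus_one(data[i]);
}

// No specifier is equivalent to __host__: an ordinary CPU function.
// Hypothetical helper that maps x -> x*x + 1 over host_data on the GPU.
bool apply_on_device(float* host_data, int n) {
    if (n <= 0) return false;
    float* dev_data = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dev_data, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev_data, host_data, bytes, cudaMemcpyHostToDevice);
    apply<<<(n + 255) / 256, 256>>>(dev_data, n);
    cudaError_t err =
        cudaMemcpy(host_data, dev_data, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev_data);
    return err == cudaSuccess;
}

int main() {
    float v[3] = {1.0f, 2.0f, 3.0f};
    if (!apply_on_device(v, 3)) {
        std::printf("CUDA error\n");
        return 1;
    }
    // square() can also be called here, on the host.
    std::printf("device: %g %g %g, host square(3) = %g\n",
                v[0], v[1], v[2], square(3.0f));
    return 0;
}
```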

It also provides variable memory space specifiers: __device__, __constant__, __shared__, __managed__, and __restrict__.

A memory space specifier denotes the memory location of a variable on the device.
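The sketch below uses several of these specifiers in one scaled-sum kernel. It is an illustration under stated assumptions: the names `scale`, `total`, `scaled_sum`, and `scaled_sum_on_device` are hypothetical, the block size is fixed at 256 (a power of two, which the reduction loop relies on), and `__managed__` is not shown.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

constexpr int kThreads = 256;  // assumed block size; must be a power of two

// __constant__: read-only inside kernels, written from the host.
__constant__ float scale;

// __device__: a variable in device global memory.
__device__ float total;

// __restrict__ promises the compiler that `in` is not aliased.
__global__ void scaled_sum(const float* __restrict__ in, int n) {
    // __shared__: one copy per thread block, visible to all its threads.
    __shared__ float partial[kThreads];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;
    partial[tid] = (i < n) ? scale * in[i] : 0.0f;
    __syncthreads();

    // Tree reduction within the block, in shared memory.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) partial[tid] += partial[tid + stride];
        __syncthreads();
    }
    // One thread per block adds the block's result to the global total.
    if (tid == 0) atomicAdd(&total, partial[0]);
}

// Hypothetical helper: returns host_scale * sum(host_in[0..n)).
float scaled_sum_on_device(const float* host_in, int n, float host_scale) {
    float* dev_in = nullptr;
    float zero = 0.0f, result = 0.0f;
    size_t bytes = n * sizeof(float);
    cudaMalloc(&dev_in, bytes);
    cudaMemcpy(dev_in, host_in, bytes, cudaMemcpyHostToDevice);
    cudaMemcpyToSymbol(scale, &host_scale, sizeof(float));
    cudaMemcpyToSymbol(total, &zero, sizeof(float));
    scaled_sum<<<(n + kThreads - 1) / kThreads, kThreads>>>(dev_in, n);
    cudaMemcpyFromSymbol(&result, total, sizeof(float));
    cudaFree(dev_in);
    return result;
}

int main() {
    float ones[1000];
    for (int i = 0; i < 1000; ++i) ones[i] = 1.0f;
    std::printf("scaled sum = %g\n", scaled_sum_on_device(ones, 1000, 2.0f));
    return 0;
}
```

Error checking is omitted here to keep the focus on where each variable lives.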

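CUDA also provides built-in vector types, which are derived from the basic integer and floating-point types and have up to 4 components (for example, int2 and float4).

The vector types are provided to increase bandwidth utilization: a thread can load or store a whole vector value with a single, wider memory access.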
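As an illustrative sketch, the kernel below processes an array through `float4`, so each thread moves 16 bytes per load and store. The names `scale4` and `scale_on_device` are hypothetical, and the helper assumes the element count is a multiple of 4.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Each thread reads one float4, scales its four components, and writes it back.
__global__ void scale4(float4* data, int n4, float s) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n4) {
        float4 v = data[i];  // one 16-byte load
        // make_float4 is the built-in constructor function for float4.
        data[i] = make_float4(v.x * s, v.y * s, v.z * s, v.w * s);
    }
}

// Hypothetical helper: multiplies n floats by s on the GPU.
// Assumes n is a positive multiple of 4.
bool scale_on_device(float* host_data, int n, float s) {
    if (n <= 0 || n % 4 != 0) return false;
    float4* dev = nullptr;
    size_t bytes = n * sizeof(float);
    // cudaMalloc returns memory aligned well beyond the 16 bytes float4 needs.
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev, host_data, bytes, cudaMemcpyHostToDevice);
    int n4 = n / 4;
    scale4<<<(n4 + 255) / 256, 256>>>(dev, n4, s);
    cudaError_t err = cudaMemcpy(host_data, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}

int main() {
    float v[8] = {1, 2, 3, 4, 5, 6, 7, 8};
    if (!scale_on_device(v, 8, 2.0f)) {
        std::printf("CUDA error\n");
        return 1;
    }
    for (int i = 0; i < 8; ++i) std::printf("%g ", v[i]);
    std::printf("\n");
    return 0;
}
```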

CUDA also provides many built-in variables (such as threadIdx, blockIdx, blockDim, and gridDim) and functions.

Complete information about CUDA programming (including a best practices guide) can be found in the CUDA Toolkit documentation.
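To show how the built-in index variables fit together, here is a sketch of a grid-stride loop. The kernel `saxpy`, the helper `saxpy_on_device`, and the fixed launch shape of 4 blocks of 128 threads are assumptions chosen for illustration.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Computes y[i] = a * x[i] + y[i] for all i in [0, n).
__global__ void saxpy(int n, float a, const float* x, float* y) {
    // threadIdx: index of this thread within its block.
    // blockIdx:  index of this block within the grid.
    // blockDim:  number of threads per block.
    // gridDim:   number of blocks in the grid.
    int first = blockIdx.x * blockDim.x + threadIdx.x;
    int stride = gridDim.x * blockDim.x;  // total threads in the grid
    // Grid-stride loop: each thread handles several elements when n is
    // larger than the number of launched threads.
    for (int i = first; i < n; i += stride) y[i] = a * x[i] + y[i];
}

// Hypothetical helper that runs saxpy on host arrays via the GPU.
bool saxpy_on_device(int n, float a, const float* host_x, float* host_y) {
    if (n <= 0) return false;
    float *dx = nullptr, *dy = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dx, bytes) != cudaSuccess) return false;
    if (cudaMalloc(&dy, bytes) != cudaSuccess) { cudaFree(dx); return false; }
    cudaMemcpy(dx, host_x, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dy, host_y, bytes, cudaMemcpyHostToDevice);
    saxpy<<<4, 128>>>(n, a, dx, dy);  // only 512 threads, whatever n is
    cudaError_t err = cudaMemcpy(host_y, dy, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dx);
    cudaFree(dy);
    return err == cudaSuccess;
}

int main() {
    const int n = 1000;
    float x[n], y[n];
    for (int i = 0; i < n; ++i) { x[i] = 1.0f; y[i] = 2.0f; }
    if (!saxpy_on_device(n, 3.0f, x, y)) {
        std::printf("CUDA error\n");
        return 1;
    }
    std::printf("y[0] = %g, y[%d] = %g\n", y[0], n - 1, y[n - 1]);
    return 0;
}
```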

Thrust

Thrust is a C++ template library for CUDA, modeled on the C++ Standard Template Library (STL). It consists of several important components: vector containers, algorithms, iterators, etc.

A vector container is a generic template container that can store any data type and can be resized dynamically. Specifically, there are two vector containers: thrust::host_vector<T> and thrust::device_vector<T>. The former stores its elements in host memory; the latter stores them in GPU device memory.
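A brief sketch of the two containers working together with Thrust algorithms is given below. The helper name `sort_and_sum` is hypothetical; the Thrust calls themselves (`thrust::sort`, `thrust::reduce`, `thrust::copy`) are standard parts of the library.

```cuda
#include <cstdio>
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/host_vector.h>
#include <thrust/reduce.h>
#include <thrust/sort.h>

// Hypothetical helper: sorts h in place using the GPU and returns the sum
// of its elements.
int sort_and_sum(thrust::host_vector<int>& h) {
    // Assigning a host_vector to a device_vector copies host -> device.
    thrust::device_vector<int> d = h;

    // Algorithms applied to device iterators execute on the GPU.
    thrust::sort(d.begin(), d.end());
    int sum = thrust::reduce(d.begin(), d.end(), 0);

    // Copy the sorted data back from device memory to host memory.
    thrust::copy(d.begin(), d.end(), h.begin());
    return sum;
}

int main() {
    thrust::host_vector<int> h(3);
    h[0] = 3; h[1] = 1; h[2] = 2;

    // Vectors can be resized dynamically, like std::vector.
    h.resize(4);
    h[3] = 0;

    int sum = sort_and_sum(h);
    std::printf("sum = %d, sorted:", sum);
    for (size_t i = 0; i < h.size(); ++i) std::printf(" %d", h[i]);
    std::printf("\n");
    return 0;
}
```

Note that no explicit `cudaMalloc`, `cudaMemcpy`, or kernel launch appears: the containers and algorithms handle allocation, transfers, and launches internally.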

We take a look at a few use cases of these components. More information about the Thrust library can be found in the Thrust API documentation.